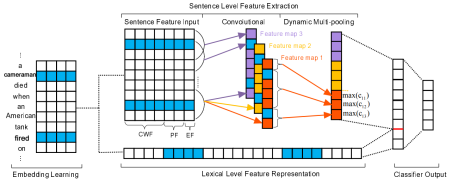

    from keras import backend as K
    from keras.initializers import Constant
    from keras.layers import (Concatenate, Conv1D, Dense, Dropout, Embedding, Flatten,
                              Input, Lambda, MaxPooling1D, TimeDistributed)
    from keras.models import Model
    from keras.optimizers import Adam
    from keras.regularizers import l2


    class DMCNN:
        def __init__(self, max_sequence_length, embedding_matrix,
                     window_size=3, filters_num=200, pf_dim=5, invalid_flag=-1,
                     output=8, l2_param=0.01, lr_param=0.001):
            self.steps = max_sequence_length
            self.embedding_matrix = embedding_matrix
            self.window = window_size
            self.filters = filters_num
            self.dim = embedding_matrix.shape[1]
            self.pf_dim = pf_dim
            self.invalid_flag = invalid_flag  # stored, but not used by build()
            self.output = output
            self.l2_param = l2_param
            self.model = self.build()
            # `lr` is the Keras 2.x argument name; newer releases call it `learning_rate`.
            self.model.compile(loss='categorical_crossentropy',
                               optimizer=Adam(lr=lr_param, beta_1=0.8),
                               metrics=['accuracy'])

        def build(self):
            # Inputs: word indices, pairwise position features, lexical-context word
            # indices, and one three-segment mask per token position.
            cwf_input = Input(shape=(self.steps,), name='Word2Vec')
            pf_input = Input(shape=(self.steps, self.steps, 2), name='PositionFeatures')
            lexical_level_input = Input(shape=(self.steps, 6), name='LexicalLevelFeatures')
            event_words_mask_input = Input(shape=(self.steps, 3, self.steps), name='EventWordsMask')

            # Frozen pretrained word embeddings: (batch, steps, dim).
            cwf_emb = Embedding(self.embedding_matrix.shape[0],
                                self.embedding_matrix.shape[1],
                                embeddings_initializer=Constant(self.embedding_matrix),
                                input_length=self.steps,
                                trainable=False, name='cwf_embedding')(cwf_input)
            # Repeat the sentence once per token position: (batch, steps, steps, dim).
            cwf_repeat = Lambda(lambda x: K.repeat_elements(x[:, None, :, :], rep=self.steps, axis=1),
                                name='max_sequence_repeat')(cwf_emb)
            pf_emb = TimeDistributed(Dense(self.pf_dim), name='pf_embedding')(pf_input)
            sentence_level = Concatenate(name='SentenceLevel')([cwf_repeat, pf_emb])

            # Apply each of the three segment masks, then stack them on a new axis 2.
            sentence_masks = []
            for i in range(3):
                # `i=i` binds the loop variable now instead of at call time.
                sentence_mask = Lambda(
                    lambda x, i=i: K.expand_dims(x[0][:, :, i, :], axis=-1) * x[1],
                    name='mask{}'.format(i))([event_words_mask_input, sentence_level])
                sentence_mask_reshape = Lambda(lambda x: K.expand_dims(x, axis=2),
                                               name='sentence_mask_reshape{}'.format(i))(sentence_mask)
                sentence_masks.append(sentence_mask_reshape)
            sentence = Concatenate(name='SentenceLevelMask', axis=2)(sentence_masks)

            # Convolve and max-pool each masked segment separately.
            conv = TimeDistributed(
                TimeDistributed(Conv1D(filters=self.filters, kernel_size=self.window, activation='relu')),
                name='conv')(sentence)
            # The pool spans the whole convolution output, leaving one vector per segment.
            conv_pool = TimeDistributed(
                TimeDistributed(MaxPooling1D(self.steps - self.window + 1)),
                name='max_pooling')(conv)
            conv_flatten = TimeDistributed(Flatten(), name='flatten')(conv_pool)
            cnn = TimeDistributed(Dropout(0.5), name='dropout')(conv_flatten)

            # Lexical-level features: embed each of the six context words per position.
            lexical_level_embeddings = []
            for i in range(6):
                lexical_level_emb = TimeDistributed(
                    Lambda(lambda x, i=i: self.get_embedding(x[:, i])),
                    name='LexicalEmbedding{}'.format(i))(lexical_level_input)
                lexical_level_embeddings.append(lexical_level_emb)
            lexical_level = Concatenate(name='LexicalLevel')(lexical_level_embeddings)

            # Fuse both feature levels and classify every token position.
            fusion = Concatenate(name='LexicalAndSentence')([cnn, lexical_level])
            dense = TimeDistributed(
                Dense(32, activation='relu', kernel_regularizer=l2(self.l2_param)),
                name='fc')(fusion)
            output = TimeDistributed(
                Dense(self.output, activation='softmax', kernel_regularizer=l2(self.l2_param)),
                name='output')(dense)

            model = Model(inputs=[cwf_input, pf_input, lexical_level_input, event_words_mask_input],
                          outputs=output)
            return model

        def get_embedding(self, x):
            # Look up one word index per sample and return a flat (batch, dim) vector.
            x = K.expand_dims(x, axis=-1)
            emb = Embedding(self.embedding_matrix.shape[0],
                            self.embedding_matrix.shape[1],
                            embeddings_initializer=Constant(self.embedding_matrix),
                            input_length=1,
                            trainable=False)(x)
            flat = Flatten()(emb)
            return flat
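The tensor shapes that flow through `build()` follow from simple arithmetic on the constructor arguments. The helper below is a sketch that is not part of the original class. The name `trace_dmcnn_shapes` is hypothetical, and it assumes Keras defaults that the listing does not state explicitly: `'valid'` padding for `Conv1D`, and a `MaxPooling1D` stride equal to the pool size. It needs no Keras installation and lists the per-sample shape (batch axis omitted) after each named layer.

```python
def trace_dmcnn_shapes(steps, dim, pf_dim=5, filters=200, window=3, output=8):
    """Return the per-sample shape (batch axis omitted) after each named layer."""
    feat = dim + pf_dim            # word vector concatenated with position embedding
    conv_len = steps - window + 1  # Conv1D output length with 'valid' padding (assumed)
    return {
        "cwf_embedding": (steps, dim),
        "max_sequence_repeat": (steps, steps, dim),
        "pf_embedding": (steps, steps, pf_dim),
        "SentenceLevel": (steps, steps, feat),
        "SentenceLevelMask": (steps, 3, steps, feat),
        "conv": (steps, 3, conv_len, filters),
        "max_pooling": (steps, 3, 1, filters),  # pool size equals conv_len
        "flatten": (steps, 3 * filters),
        "LexicalLevel": (steps, 6 * dim),
        "LexicalAndSentence": (steps, 3 * filters + 6 * dim),
        "fc": (steps, 32),
        "output": (steps, output),
    }


# Example values chosen for illustration only: 30 tokens, 100-dimensional embeddings.
shapes = trace_dmcnn_shapes(steps=30, dim=100)
for name, shape in shapes.items():
    print("{:20s} {}".format(name, shape))
```

The `SentenceLevel` tensor grows with the square of `steps`, so the maximum sequence length is probably the first value to check if memory becomes a constraint.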